My last post used random forest proximity to visualize a set of diamond shapes (the random forest was trained to distinguish diamonds from non-diamonds).

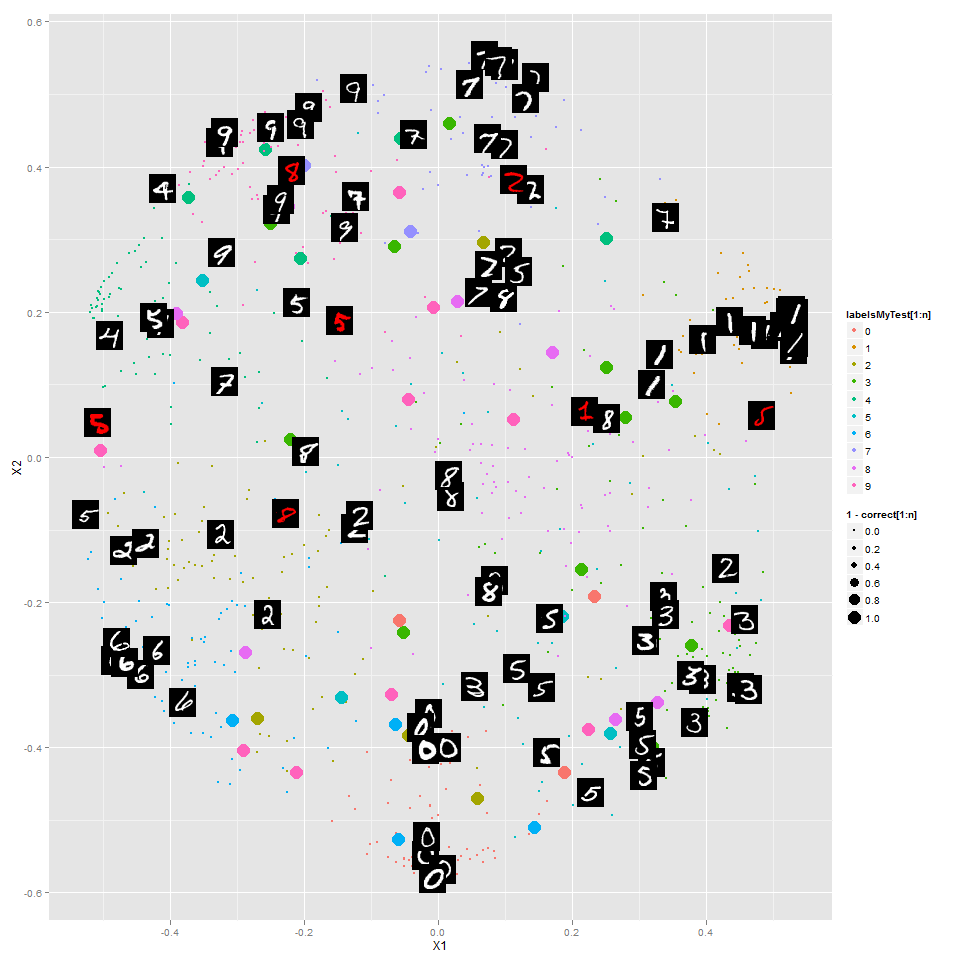

This time I looked at the digits data set that Kaggle is using for its "getting started" competition. The random forest is trained to classify the digits, and this is an embedding of 1000 digits into 2 dimensions that preserves the proximities from the random forest as closely as possible:

The colors of the points show the correct label. The larger points are digits classified incorrectly, and you can see that in general those are ones that the random forest has put in the wrong "region". I've shown some of the digits themselves (instead of colored points) -- the red ones are incorrectly classified.

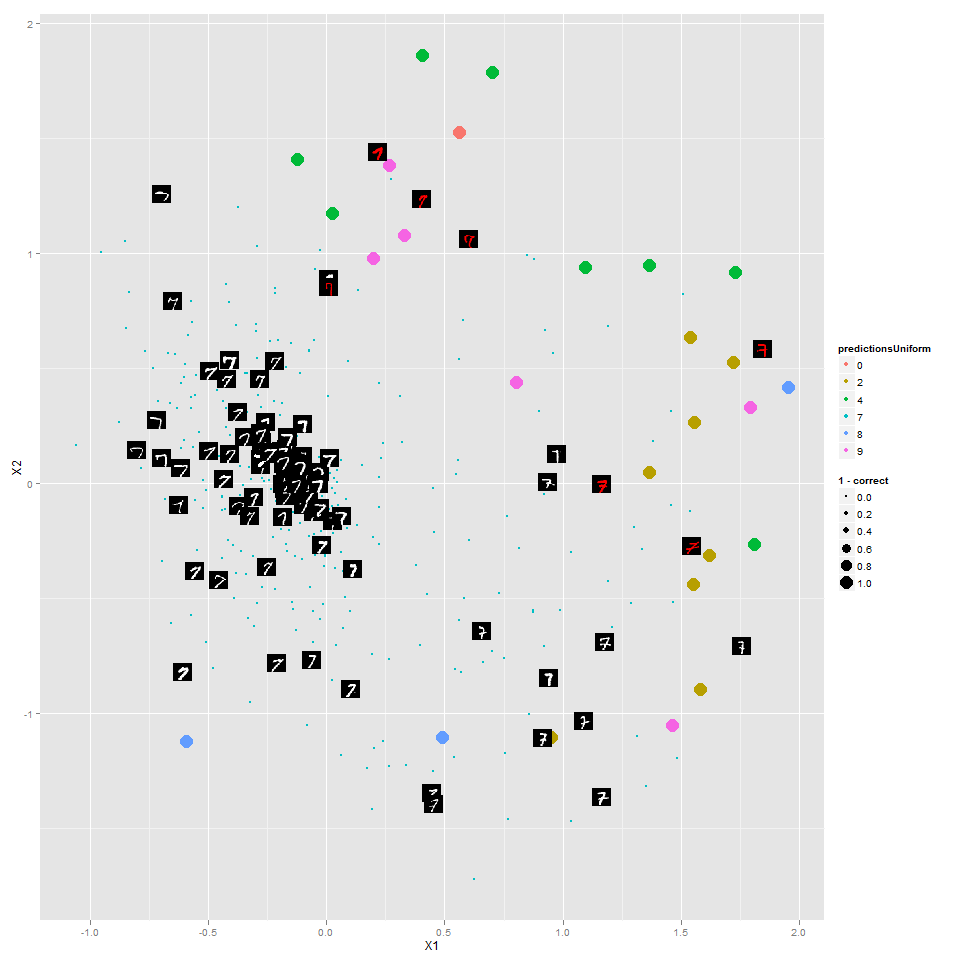

Here's the same but just for the 7's:

The random forest has done a reasonable job putting different types of 7's in different areas, with the most "canonical" 7's toward the middle.

You can see all of the other digits at http://www.learnfromdata.com/media/blog/digits/.

Note that this random forest is different from the one in my last post -- here it's built to classify the digits, not separate digits from non-digits. I wonder what kind of results a random forest to distinguish 7's from non-7's would look like?
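One way to set up that 7's-versus-non-7's experiment is sketched below. It is only a starting point and makes several assumptions: it uses scikit-learn's bundled 8x8 `load_digits` set as a stand-in for the Kaggle data, takes only the first 500 digits, and measures proximity as the fraction of trees in which two points share a leaf. It computes the proximities among the 7's and stops there, so it says nothing about what the resulting picture would look like.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.ensemble import RandomForestClassifier

# Stand-in data (assumption): scikit-learn's small 8x8 digits, not the Kaggle set.
digits = load_digits()
X, y = digits.data[:500], digits.target[:500]

# Binary target: 1 for a 7, 0 for any other digit.
is_seven = (y == 7).astype(int)
forest = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, is_seven)

# Leaf index of every 7 in every tree: shape (n_sevens, n_trees).
sevens = X[y == 7]
leaves = forest.apply(sevens)

# Proximity = fraction of trees in which two 7's fall in the same leaf.
n = len(sevens)
prox = np.zeros((n, n))
for t in range(leaves.shape[1]):
    col = leaves[:, t]
    prox += col[:, None] == col[None, :]
prox /= leaves.shape[1]
```

The `prox` matrix could then be embedded in 2D the same way as the multi-class proximities.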

Code is on GitHub.

Sunday, August 5, 2012

Random forests for visualizing data

Recently I read about using random forests as a way to visualize data. Here's how it works:

- Have a data set

- Create a set of fake data by permuting the columns randomly -- each column will still have the same distribution, but the relationships are destroyed.

- Train a random forest to distinguish the fake data from the original data.

- Get a "proximity" measure between points, based on how often the points are in the same leaf node.

- Embed the points in 2D in such a way as to distort these proximities as little as possible.
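The steps above can be sketched in Python roughly as follows. This is a minimal illustration under stated assumptions, not the code behind the post: it assumes scikit-learn's `RandomForestClassifier`, treats `sqrt(1 - proximity)` as the distance between points, and uses classical MDS for the 2D embedding, since the post doesn't say which embedding method was used. The function names are made up for this example.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier


def permute_columns(X, rng):
    """Shuffle each column independently: marginals are kept, relationships are destroyed."""
    return np.column_stack([rng.permutation(X[:, j]) for j in range(X.shape[1])])


def forest_proximity(forest, X):
    """Fraction of trees in which each pair of rows lands in the same leaf."""
    leaves = forest.apply(X)  # shape (n_samples, n_trees)
    n = leaves.shape[0]
    prox = np.zeros((n, n))
    for t in range(leaves.shape[1]):
        col = leaves[:, t]
        prox += col[:, None] == col[None, :]
    return prox / leaves.shape[1]


def classical_mds(D, k=2):
    """Classical (Torgerson) MDS of a distance matrix D into k dimensions."""
    n = D.shape[0]
    J = np.eye(n) - np.ones((n, n)) / n      # centering matrix
    B = -0.5 * J @ (D ** 2) @ J              # double-centered squared distances
    vals, vecs = np.linalg.eigh(B)
    idx = np.argsort(vals)[::-1][:k]         # k largest eigenvalues
    return vecs[:, idx] * np.sqrt(np.maximum(vals[idx], 0.0))


def embed_with_forest(X, n_trees=200, seed=0):
    rng = np.random.default_rng(seed)
    fake = permute_columns(X, rng)
    data = np.vstack([X, fake])
    # 1 = original row, 0 = fake (column-permuted) row
    labels = np.r_[np.ones(len(X), dtype=int), np.zeros(len(fake), dtype=int)]
    forest = RandomForestClassifier(n_estimators=n_trees, random_state=seed)
    forest.fit(data, labels)
    prox = forest_proximity(forest, X)       # proximities among original rows only
    coords = classical_mds(np.sqrt(1.0 - prox), k=2)
    return coords, prox
```

Calling `embed_with_forest(X)` on an array with one row per image returns the 2D coordinates to plot, along with the proximity matrix they were derived from.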

I decided to try this in a case where I would know what the outcome should be, as a way of thinking about how it works. So I generated 931 images of diamonds varying in two dimensions:

- Size

- Position (only how far left/right)

Then I followed the above procedure, getting this:

Neat! The random forest even picked up on a feature of this space that I wasn't expecting it to: for the same difference in position, small diamonds need to be closer to each other than large diamonds. None of my diamonds have a diameter smaller than 4 pixels, but imagine if the sizes got so small the diamond wasn't even there -- then position wouldn't matter at all for those diamonds.

I set one column of pixels to random values, and the method still worked just as well. (Which makes sense, as the random forest only cares about pixels that help it distinguish between diamonds and non-diamonds.)

A cool technique that I'd love to try some more! For one, I'd like to understand better how it differs from various manifold learning methods. One nice feature is that you could easily use this with a mix of continuous and categorical variables.

Note that starting with Euclidean distance between images (as vectors in R^2500) and mapping points to 2D doesn't seem to produce anything useful:
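One possible reason, sketched below with a hypothetical diamond renderer (the 50x50 image size is inferred from R^2500; the shape, radius, and positions are assumptions for illustration): for binary images, the Euclidean distance depends only on how many pixels differ. Once two diamonds no longer overlap, moving them further apart doesn't change the distance at all, so raw pixel distance can't tell "a little shifted" from "very shifted".

```python
import numpy as np


def diamond(center_col, radius, size=50):
    """Binary size x size image of a diamond, vertically centered (hypothetical renderer)."""
    rows, cols = np.mgrid[0:size, 0:size]
    center_row = size // 2
    inside = np.abs(rows - center_row) + np.abs(cols - center_col) <= radius
    return inside.astype(float)


def euclidean(a, b):
    """Euclidean distance between two images treated as flat vectors."""
    return np.linalg.norm(a.ravel() - b.ravel())


base = diamond(center_col=10, radius=4)
for shift in (2, 10, 20, 30):
    other = diamond(center_col=10 + shift, radius=4)
    # The distance grows while the diamonds overlap, then stays constant.
    print(shift, round(euclidean(base, other), 3))
```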

Code is available on GitHub.
